Singularity

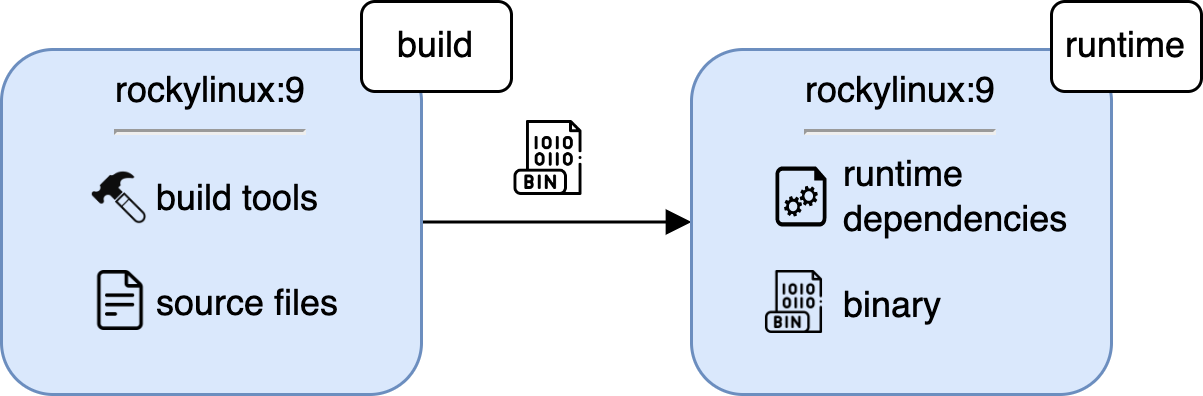

We chose the container technology Singularity to package our software together with all its dependencies. Containers are a virtualization technique that provides encapsulated runtime environments. This circumvents a common problem on HPC systems: users usually lack the permissions to install additional software themselves. A container solves this by providing a pre-built environment with everything the user needs to run their application. Unlike virtual machines, containers share the operating system kernel with the host machine, which makes them a lightweight solution with less performance overhead. Singularity in particular was designed for scientific and high-performance computing workloads and can therefore readily use HPC-specific software and hardware components such as MPI or GPUs.

To produce our container, we use a multi-stage build process with separate build and runtime stages. The build stage installs all dependencies needed to compile the application, while the runtime stage includes only the dependencies required to run it. This keeps the container as lightweight as possible while still containing all components the application needs to produce accurate and reliable results.

MPI Integration

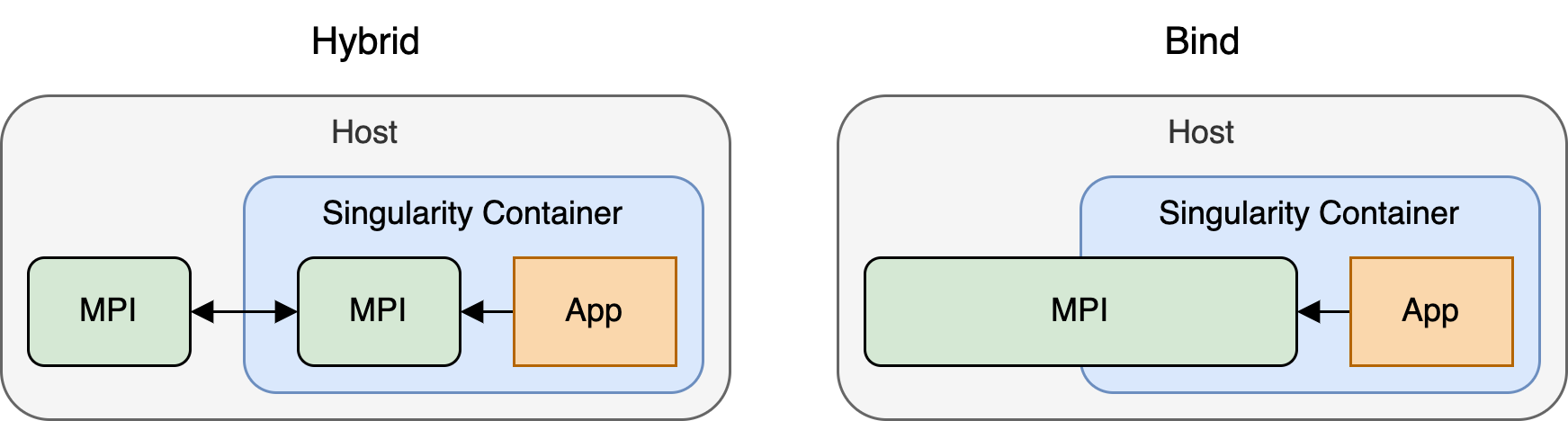

MPI can be integrated with a container in two different ways. The first is the “Hybrid Model”, in which MPI is installed both in the container and on the host system. At launch, the host’s MPI instance communicates with the MPI installation inside the container. The second is the “Bind Model”, in which the host’s MPI installation is mounted into the container. The Bind Model is more performant because there is less communication overhead. However, it is less portable and makes results harder to reproduce: the container lacks its own MPI installation and can no longer be executed on its own.
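The difference between the two models shows up mainly in how the containerized application is launched. The following shell sketch composes, but does not execute, one launch command per model. The image name, the application path and the host MPI prefix are hypothetical placeholders rather than values from our setup; `mpirun`, `singularity exec` and `--bind` are the standard launcher and Singularity options.

```shell
#!/bin/sh
# Sketch: print the launch command for each MPI integration model.
# All three values below are hypothetical placeholders.
IMAGE="app.sif"                # container image
APP_PATH="/opt/app/bin/app"    # application path inside the container
HOST_MPI_DIR="/opt/host-mpi"   # MPI installation prefix on the host

# Hybrid Model: the host's mpirun starts one container process per rank;
# the application uses the MPI library installed inside the image.
hybrid_cmd() {
    echo "mpirun -n $1 singularity exec $IMAGE $APP_PATH"
}

# Bind Model: same launch, but the host's MPI directory is bind-mounted
# into the container because the image ships no MPI of its own.
bind_cmd() {
    echo "mpirun -n $1 singularity exec --bind $HOST_MPI_DIR $IMAGE $APP_PATH"
}

hybrid_cmd 4
bind_cmd 4
```

In both cases the host’s `mpirun` acts as the launcher; only the origin of the MPI library seen by the application differs.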

Image Definition

In the following, we show the image definition for a container that uses the MPI Bind Model. The definition file specifies two stages, build and runtime, both based on the rockylinux image. We chose Rocky Linux because it is the unofficial successor to the discontinued CentOS, which runs on the HPC cluster used during testing. In the build stage, the %files section copies the source files of the example C++ application into the container file system. The subsequent %post section installs the build dependencies and compiles the application in a build directory.

    Bootstrap: docker
    From: rockylinux:9
    Stage: build

    %files
        laplace2d/src src
        laplace2d/CMakeLists.txt CMakeLists.txt

    %post
        yum update -y && \
        yum group install -y "Development Tools" && \
        yum install -y mpich mpich-devel cmake && \
        source /etc/profile.d/modules.sh && \
        module load mpi && \
        mkdir build && cd build && cmake .. && make

In the runtime stage, we first copy the build directory from the previous stage. The %post section then installs the runtime dependencies for the application. Note that we do not install MPI during this step, as this container uses the Bind Model. The %environment section adjusts the PATH and LD_LIBRARY_PATH environment variables so that the MPI installation mounted from the host system can be found when the container is executed. Finally, the %apprun section specifies the application to be launched.

    Bootstrap: docker
    From: rockylinux:9
    Stage: runtime

    %files from build
        build build

    %environment
        export MPI_DIR=/cluster/mpi/mpich
        export PATH="$MPI_DIR/bin:$PATH"
        export LD_LIBRARY_PATH="$MPI_DIR/lib:$LD_LIBRARY_PATH"

    %post
        yum update -y && \
        yum install -y gcc-toolset-12 compat-libgfortran-48

    %apprun laplace
        /build/bin/laplace

GitLab CI Job

Using the definition file from the previous section, we can define a job that builds the image in a CI pipeline on every commit to the repository. We use a Docker image with Singularity installed to run the job. To build the Singularity image, we run Singularity’s build command in the script section. The produced image is then stored as an artifact for use in later jobs.

    build-singularity-mpich-bind:
      image:
        name: quay.io/singularity/singularity:v3.10.4
        entrypoint: [""]
      stage: build
      artifacts:
        paths: ["rockylinux9-mpich-bind.sif"]
      script:
        - |
          singularity build \
            "rockylinux9-mpich-bind.sif" \
            "rockylinux9-mpich-bind.def"