Workflow Implementation for Regression Tests

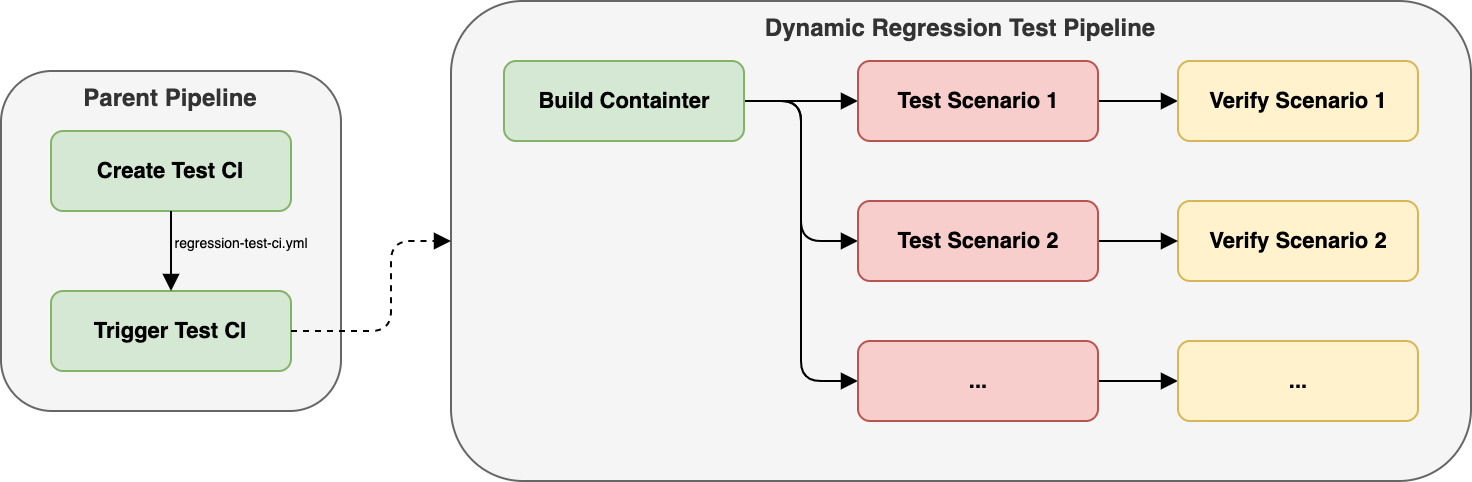

In the Singularity, HPC-Rocket, and Fieldcompare sections, we described the individual components of our workflow and how the CI jobs can be composed. In this section, we describe how we arrange a complete regression test suite for our sample application that can easily be extended with new test cases without modifying the workflow.

Creating a new test CI pipeline

To run our regression tests, we implemented the Python module jobgeneration, which dynamically generates a new CI pipeline. The module automatically picks up every file in the test directory whose name ends in -test. From a template file, it generates GitLab CI jobs with HPC-Rocket and Fieldcompare instructions for each collected test file. The first job, create-test-ci, creates the new CI pipeline YAML file generated/tests-ci.yml by executing the jobgeneration module and uploads the result as an artifact.

```yaml
create-test-ci:
  image: python:3.10
  stage: test
  before_script:
    - pip install -r jobgeneration/requirements.txt
  script:
    - python3 -m jobgeneration test
  artifacts:
    paths:
      - generated/
```

A second job, trigger-test-ci, takes the generated CI pipeline file from the artifacts of create-test-ci and uses it to launch a child pipeline that runs the regression tests.

```yaml
trigger-test-ci:
  stage: test
  needs:
    - create-test-ci
  trigger:
    include:
      - artifact: generated/tests-ci.yml
        job: create-test-ci
    strategy: depend
  variables:
    PARENT_PIPELINE_ID: $CI_PIPELINE_ID
```

The new CI pipeline file is created by rendering a Jinja template. For each test scenario, two jobs are created: the first runs the actual test scenario using HPC-Rocket, and the second verifies the results with Fieldcompare. A shortened version of the template is shown in the listing below:

```jinja
{% for test_case in test_cases %}
run-{{ test_case }}:
  stage: simulation
  script:
    - hpc-rocket launch --watch tests/rocket.yml
  # ...

verify-{{ test_case }}:
  stage: verify
  needs: ["run-{{ test_case }}"]
  script:
    - fieldcompare file results/TemperatureField.avs reference_data/{{ test_case }}.avs
  # ...
{% endfor %}
```

Adding a new test therefore only requires adding a Slurm script to the tests subfolder. The jobgeneration module scans all Slurm scripts in that folder and generates the two corresponding CI jobs, which run HPC-Rocket and Fieldcompare as described above, so the workflow itself never has to change.
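The discovery-and-render step can be illustrated with a minimal Python sketch using the jinja2 library. It is not the actual jobgeneration module. It assumes that each test scenario is a file directly inside the tests directory whose name stem ends in -test (for example heat-test.slurm), that the test case name is that stem, and that the template is embedded as a string rather than loaded from a template file. The function names collect_test_cases, render_pipeline, and main are hypothetical. The sketch's template additionally declares the simulation and verify stages, because a child pipeline that uses them must declare them (they are not among GitLab's default stages), and it omits the parts elided in the listing above.

```python
"""Hypothetical sketch of a CI job generator (not the real jobgeneration module).

Assumptions: test scenarios are files in a test directory whose stem ends in
"-test", the test case name is that stem, and the template is an inline string.
"""
from pathlib import Path

from jinja2 import Template

# Shortened, hypothetical template modeled on the listing above. The stages
# are declared explicitly because "simulation" and "verify" are not defaults.
CI_TEMPLATE = """\
stages:
  - simulation
  - verify
{% for test_case in test_cases %}
run-{{ test_case }}:
  stage: simulation
  script:
    - hpc-rocket launch --watch tests/rocket.yml

verify-{{ test_case }}:
  stage: verify
  needs: ["run-{{ test_case }}"]
  script:
    - fieldcompare file results/TemperatureField.avs reference_data/{{ test_case }}.avs
{% endfor %}"""


def collect_test_cases(test_dir: Path, suffix: str = "-test") -> list[str]:
    """Return the sorted stems of all regular files in test_dir ending in suffix."""
    return sorted(
        path.stem
        for path in test_dir.iterdir()
        if path.is_file() and path.stem.endswith(suffix)
    )


def render_pipeline(test_cases: list[str]) -> str:
    """Render the template, producing one run/verify job pair per test case."""
    return Template(CI_TEMPLATE).render(test_cases=test_cases)


def main(test_dir: str, output: str = "generated/tests-ci.yml") -> None:
    """Discover test cases in test_dir and write the child pipeline to output."""
    out_path = Path(output)
    out_path.parent.mkdir(parents=True, exist_ok=True)
    out_path.write_text(render_pipeline(collect_test_cases(Path(test_dir))))
```

Calling main("tests") from the repository root would write generated/tests-ci.yml, the path that trigger-test-ci includes as its child pipeline. Keeping discovery and rendering in separate functions makes it possible to test the file-matching rule without rendering any YAML.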